
| Autonomous Vehicle Platform and MSC-RAD4R Dataset

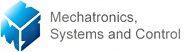

Datasets are essential to autonomous driving research. Open-source datasets such as KITTI and nuScenes are widely available, but their usefulness is limited because the environment in which they were collected differs from the operating environment of a specific platform. For this reason, we have built our own dataset construction platforms, as shown below. The platforms are equipped with various sensors, including an RGB camera, IR camera, LiDAR, 4D radar, GPS, and IMU, and allow users to build datasets at the time and place they want.

Fig. 1. MSC dataset construction platforms (Vehicle, RC car).

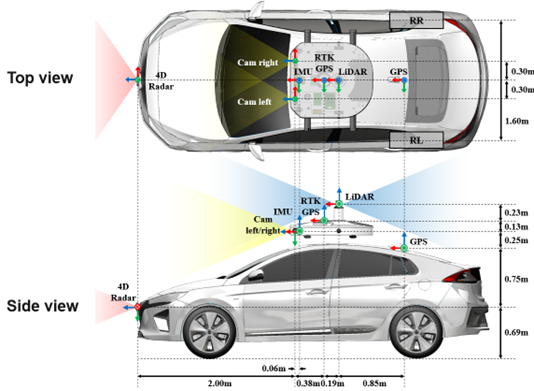

Fig. 3. MSC-RAD4R dataset sample including next-generation sensor 4D radar.

<Reference> [1] Choi, Minseong, et al. "MSC-RAD4R: ROS-Based Automotive Dataset With 4D Radar." IEEE Robotics and Automation Letters (2023).

[2] Park, Ji-Il, et al. "Development of ROS-based Small Unmanned Platform for Acquiring Autonomous Driving Dataset in Various Places and Weather Conditions." 2022 IEEE 46th Annual Computers, Software, and Applications Conference (COMPSAC). IEEE, 2022.

Detection and Tracking

To drive autonomously, a vehicle must recognize its surrounding environment, estimate the location of the ego-vehicle, and generate a route to the target destination from the environment information and the ego-vehicle location. To recognize the environment, we use object detection and tracking algorithms. An object detection network provides class and location information for vehicles, pedestrians, and other objects. An object tracking network additionally provides identity information, so the same object can be followed across frames. By choosing an appropriate network based on factors such as the information required for autonomous driving and the situation of the ego-vehicle, it is possible to collect a wide range of information about the environment around the ego-vehicle.
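The difference between detection output (class and location) and tracking output (class, location, and identity) can be illustrated with a minimal sketch. This is not the network used on the platforms described here. It is a simple greedy IoU-matching tracker over 2D boxes, and the names `Detection`, `iou`, and `GreedyIouTracker`, as well as the 0.3 threshold, are assumptions made for illustration only.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Detection:
    """Detector output for one object: class and location only."""
    label: str   # e.g. "vehicle" or "pedestrian"
    box: tuple   # axis-aligned box (x1, y1, x2, y2)


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class GreedyIouTracker:
    """Hypothetical tracker: attaches an identity to each detection by
    greedily matching it to the previous frame's boxes of the same class."""

    def __init__(self, iou_threshold=0.3):  # threshold is an assumed value
        self.iou_threshold = iou_threshold
        self.tracks = {}        # track id -> Detection from the previous frame
        self._ids = count(1)    # source of fresh track ids

    def update(self, detections):
        # Score every same-class (track, detection) pair, best overlap first.
        pairs = sorted(
            (
                (iou(track.box, det.box), tid, i)
                for tid, track in self.tracks.items()
                for i, det in enumerate(detections)
                if track.label == det.label
            ),
            reverse=True,
        )
        assigned = {}           # detection index -> track id
        used_tracks = set()
        for score, tid, i in pairs:
            if score < self.iou_threshold:
                break           # remaining pairs overlap even less
            if tid in used_tracks or i in assigned:
                continue
            assigned[i] = tid
            used_tracks.add(tid)

        # Unmatched detections start new tracks. Unmatched tracks are dropped
        # immediately, which a practical tracker would usually not do.
        results, new_tracks = [], {}
        for i, det in enumerate(detections):
            tid = assigned.get(i)
            if tid is None:
                tid = next(self._ids)
            new_tracks[tid] = det
            results.append((tid, det))
        self.tracks = new_tracks
        return results


if __name__ == "__main__":
    tracker = GreedyIouTracker()
    frame1 = [Detection("vehicle", (0, 0, 10, 10)),
              Detection("pedestrian", (20, 20, 25, 30))]
    frame2 = [Detection("pedestrian", (21, 20, 26, 30)),
              Detection("vehicle", (1, 0, 11, 10)),
              Detection("vehicle", (50, 50, 60, 60))]
    for frame in (frame1, frame2):
        for tid, det in tracker.update(frame):
            print(tid, det.label, det.box)
```

In the second frame the pedestrian and the first vehicle keep the ids they received in the first frame even though the detection order changed, while the newly appearing vehicle receives a fresh id. A practical tracker would typically add motion prediction and keep unmatched tracks alive for a few frames. Both are omitted here for brevity.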

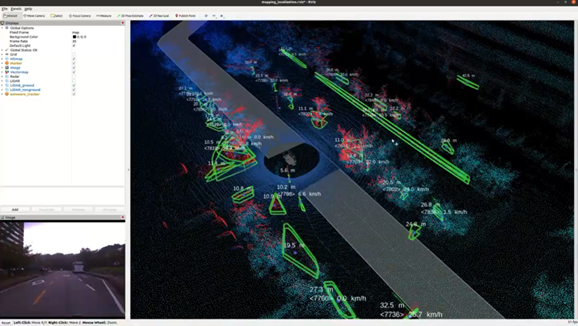

Fig. 4. Sample scene of localization on the Munji campus HD map and LiDAR-based detection result.

Fig. 5. Vehicle pose estimation via extended Kalman filter using 4D radar and camera.

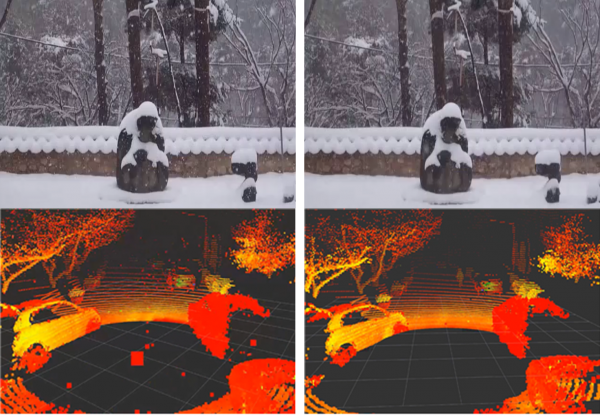

De-noise

<Reference> [1] Park, Ji-Il, Jihyuk Park, and Kyung-Soo Kim. "Fast and accurate desnowing algorithm for LiDAR point clouds." IEEE Access 8 (2020): 160202-160212.

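[2] 전현용, et al. "Development of a Model-Based Image De-snowing Algorithm" (모델 기반 이미지 디-스노잉 알고리즘 개발). Korean Journal of Military Art and Science (한국군사학논집) 77.2 (2021): 364-381.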

|